Unlocking AI Voice with Azure Speech Services

In today’s fast-moving digital era, voice technology has become a major force in defining new ways of interacting with devices, services, and data. More and more businesses are turning to voice AI systems to automate operations and offer better customer experiences, from virtual assistants to automated customer support, transcription, and real-time language translation. Azure Speech Services, Microsoft’s cloud platform of speech tools for building voice-enabled applications across platforms and markets, has been a driving force in this shift.

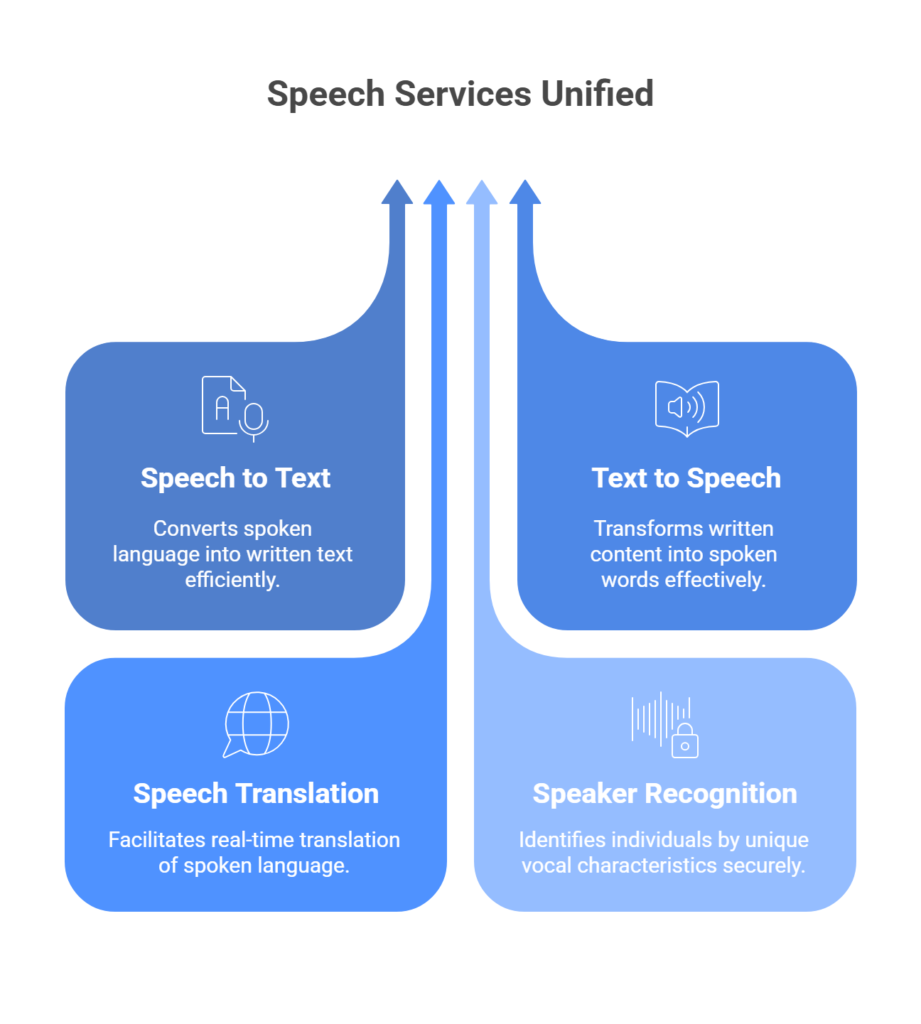

Azure Speech Services covers all of the scenarios above, whether you are building a voice bot, transcribing meetings, or creating a multilingual assistant. Built on Microsoft’s AI research, it combines speech-to-text, text-to-speech, speech translation, and speaker recognition capabilities. The services scale well, offer strong security, and integrate with other Azure services, giving enterprises, startups, and developers a comprehensive toolkit.

Next, we’ll walk you through everything you need to know about Speech Services, including core capabilities, customization options, real-world applications, and implementation steps. This guide is intended both for readers new to voice AI and for those who want to take existing solutions further, showing what voice technology can do on Microsoft’s cloud platform.


Key Capabilities of Azure Speech Services

If voice is the lock, Azure Speech Services is the key that opens up a wide range of voice interactions, from fast, flexible transcription to engaging customer experiences and natural-sounding synthesized voices. Its modular architecture lets developers pick the services that best fit their product, vertical, or business needs, without having to build complex voice infrastructure themselves.

Azure Speech Services provides top-notch capabilities for converting spoken language into text, generating near-human voice output, translating spoken words from one language to another, and identifying speakers in real time. These capabilities can be built into mobile apps, web platforms, enterprise tools, and even IoT devices, which opens up a wide range of possibilities.

The main capabilities of Azure Speech Services are:

a. Speech to Text

Speech to text lets developers transcribe spoken audio into human-readable text, either in real time or from recorded files. Its deep neural network and language models deliver high accuracy for uses ranging from call center analytics to meeting transcription and voice note applications. Azure speech to text integrates smoothly with existing systems, works across many languages, and provides automatic punctuation and speaker diarization for easier reading and analysis.
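To make this concrete, here is a minimal Python sketch of one-shot transcription with the Speech SDK (`pip install azure-cognitiveservices-speech`). It assumes a short WAV file plus the key and region of a Speech resource; `transcribe_wav` is our own hypothetical helper, not part of the SDK. The SDK is imported inside the function only so the snippet can be loaded where the SDK is not installed.

```python
def transcribe_wav(path, key, region, language="en-US"):
    """Transcribe the first utterance in a WAV file with the Azure Speech SDK."""
    # Imported here so the snippet loads even where the SDK is absent
    # (install with: pip install azure-cognitiveservices-speech).
    import azure.cognitiveservices.speech as speechsdk

    speech_config = speechsdk.SpeechConfig(subscription=key, region=region)
    speech_config.speech_recognition_language = language
    audio_config = speechsdk.audio.AudioConfig(filename=path)
    recognizer = speechsdk.SpeechRecognizer(
        speech_config=speech_config, audio_config=audio_config
    )

    # recognize_once() returns after a single utterance (it stops at the first
    # pause); longer recordings need continuous recognition instead.
    result = recognizer.recognize_once()
    if result.reason == speechsdk.ResultReason.RecognizedSpeech:
        return result.text
    if result.reason == speechsdk.ResultReason.NoMatch:
        raise ValueError("no speech could be recognized in the file")
    # Anything else (e.g. ResultReason.Canceled) usually points to a key, region, or network problem.
    raise RuntimeError(f"recognition failed: {result.reason}")
```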

b. Text to Speech

Text to speech converts written text into natural-sounding audio using Azure’s neural text-to-speech technology. It offers a wide variety of voices and can vary tone and accent to fit your branding or localization requirements. You can use it to power a voice assistant, an IVR system, or an accessibility tool for people with visual impairments. Customization options include speaking style, and developers can adjust pronunciation, pitch, and pace to give automated interactions a more human personality.
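As an illustration, the following sketch prepares a request to the text-to-speech REST endpoint using only Python’s standard library. The voice name, output format, and `User-Agent` value are example choices, and `build_tts_request` is a hypothetical helper; check the current voice list and output formats for your region before relying on them.

```python
import urllib.request
from xml.sax.saxutils import escape, quoteattr


def build_tts_request(text, voice, region, key, output_format="riff-24khz-16bit-mono-pcm"):
    """Build (but do not send) a text-to-speech REST request for one neural voice."""
    url = f"https://{region}.tts.speech.microsoft.com/cognitiveservices/v1"
    # Voice names start with their locale, e.g. "en-US-JennyNeural" -> "en-US".
    locale = "-".join(voice.split("-")[:2])
    ssml = (
        f'<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="{locale}">'
        f"<voice name={quoteattr(voice)}>{escape(text)}</voice>"
        "</speak>"
    )
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        "Content-Type": "application/ssml+xml",
        "X-Microsoft-OutputFormat": output_format,  # here: 24 kHz, 16-bit, mono WAV
        "User-Agent": "speech-demo",  # hypothetical application name
    }
    return urllib.request.Request(url, data=ssml.encode("utf-8"), headers=headers, method="POST")
```

Sending the request with `urllib.request.urlopen(req)` should return the audio bytes, which can be written straight to a `.wav` file.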

c. Speech Translation

Speech translation converts spoken audio in one language into text or synthesized speech in another. To serve a multilingual audience, an enterprise does not need to hire a team of interpreters or build language-specific solutions. Azure Speech Services supports translation between dozens of languages and dialects, with output as either text or synthesized speech.
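A minimal sketch of speech translation with the Python Speech SDK follows. It assumes a short WAV file in US English and uses German and French as target languages; those language choices and the helper name `translate_wav` are illustrative, not prescribed by the service.

```python
def translate_wav(path, key, region, source_language="en-US", target_languages=("de", "fr")):
    """Translate the first utterance in a WAV file into each target language."""
    # Imported here so the snippet loads even where the SDK is absent
    # (install with: pip install azure-cognitiveservices-speech).
    import azure.cognitiveservices.speech as speechsdk

    translation_config = speechsdk.translation.SpeechTranslationConfig(
        subscription=key, region=region
    )
    translation_config.speech_recognition_language = source_language
    for language in target_languages:
        translation_config.add_target_language(language)

    audio_config = speechsdk.audio.AudioConfig(filename=path)
    recognizer = speechsdk.translation.TranslationRecognizer(
        translation_config=translation_config, audio_config=audio_config
    )
    result = recognizer.recognize_once()
    if result.reason == speechsdk.ResultReason.TranslatedSpeech:
        # Maps each target language code to its translated text.
        return dict(result.translations)
    raise RuntimeError(f"translation failed: {result.reason}")
```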

d. Speaker Recognition

Speaker recognition identifies and verifies individual speakers by their unique voice characteristics (voice biometrics). It is especially valuable where voice authentication is required, such as secure access to banking services or voice-controlled operations in a smart home. It can also distinguish between multiple speakers in the same environment, enabling more personalized interactions along with stronger security.


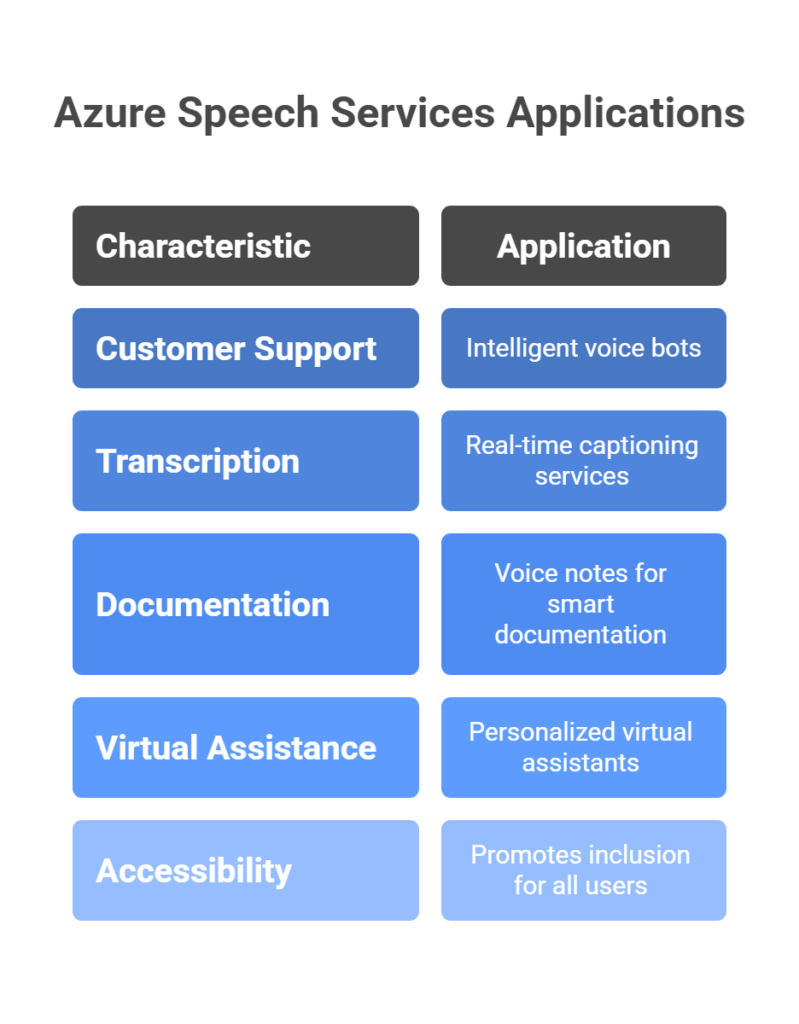

Azure Speech Services in Action: Real-Life Applications

As voice AI grows in popularity, companies in almost every sector are adopting real-time speech technologies to automate their processes. Azure Speech Services lets businesses build voice experiences that are more intelligent, faster, and more accessible. Customer service and real-time documentation are two prominent examples.

What makes the services especially valuable is the breadth of scenarios they support across industries.

Whether you work in healthcare, finance, retail, or education, these services can help you automate voice-driven tasks, reduce operational costs, and increase end-user engagement. With robust APIs, low latency, and enterprise-grade security, enterprises can roll out voice AI at scale with confidence.

a. Intelligent Customer Support and Voice Bots

One of the most common commercial applications of Azure voice AI is customer-service automation. Businesses combine speech to text and text to speech to build conversational bots that handle inquiries 24×7. These bots reduce waiting times, improve user satisfaction, and free human agents to focus on complex issues.

b. Real-Time Transcription and Captioning

Meetings, webinars, and live events benefit from real-time transcription and captioning powered by Speech Services. Captions make content accessible to users who are deaf or hard of hearing, and transcripts provide accurate records for compliance and documentation. Enterprises use this capability in Microsoft Teams and other productivity platforms.

c. Voice Notes and Smart Documentation

Healthcare, legal, and field service professionals use Azure-powered tools to dictate notes that are transcribed automatically. This saves time, reduces the errors that come with manual data entry, and lets users focus on the work at hand. Speech Services delivers high transcription accuracy and supports secure handling of confidential data.

d. Personalized Virtual Assistants

Enterprises and app developers are building virtual assistants that understand context, respond naturally, and even adjust their tone based on how the user behaves. These assistants use the full range of Speech Services capabilities to provide seamless interaction across web, mobile, and IoT devices.

e. Accessibility and Inclusion

Voice AI is a boon to users with visual or physical impairments. Microsoft Speech Services enables developers to build screen readers, speech-driven navigation tools, and voice-controlled systems, increasing digital accessibility. These voice AI solutions not only promote inclusion but also widen the user base by making applications usable by more people.

How Azure Speech Services Work Under the Hood

Building intelligent voice applications requires more than high-level APIs: it also depends on robust, scalable infrastructure for real-time audio processing, secure data transmission, and customized voice experiences. This is where Microsoft Speech Services comes into its own, with a massively scalable backend that handles the complexity of speech technology while delivering enterprise-grade performance.

Because it runs on Microsoft’s globally distributed cloud infrastructure, Azure Speech Services provides low-latency audio processing, state-of-the-art ML models, and secure APIs. Developers can add speech features to their apps without worrying about hardware setup, model training, or data pipelines, which supports rapid development, high reliability, and flexibility from prototype to production.

Now let’s look at the core components developers use to work with Azure Speech Services.

a. Azure Speech SDK

The Azure Speech SDK lets developers embed voice experiences in apps across platforms, including Windows, Linux, macOS, iOS, Android, and web browsers through JavaScript. It directly supports speech to text, text to speech, translation, and speaker recognition. It also supports keyword spotting, custom audio input streams, and fine-grained control over audio input and output, which makes it well suited to voice interfaces that work consistently across devices.

b. Azure Speech API

The Azure Speech REST APIs provide HTTP endpoints for accessing Microsoft Speech Services without installing the SDK. This suits lightweight and serverless deployments, and even browser-based interactions. With little configuration, developers can use the APIs to transcribe audio files, synthesize speech, and verify speakers without giving up performance or security.
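For the REST route, here is a sketch of a speech-to-text request for short audio, built with the standard library. It assumes a 16 kHz, 16-bit mono PCM WAV file; this endpoint is intended for short clips (roughly 60 seconds of audio), so longer recordings are better served by batch transcription or the SDK. `build_stt_request` is a hypothetical helper name.

```python
import urllib.parse
import urllib.request


def build_stt_request(wav_bytes, region, key, language="en-US", sample_rate=16000):
    """Build (but do not send) a POST request to the short-audio speech-to-text endpoint."""
    base = (
        f"https://{region}.stt.speech.microsoft.com"
        "/speech/recognition/conversation/cognitiveservices/v1"
    )
    # The 'simple' format returns RecognitionStatus and DisplayText in the JSON body.
    query = urllib.parse.urlencode({"language": language, "format": "simple"})
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        # Describes the uploaded audio: PCM WAV at the given sample rate.
        "Content-Type": f"audio/wav; codecs=audio/pcm; samplerate={sample_rate}",
        "Accept": "application/json",
    }
    return urllib.request.Request(f"{base}?{query}", data=wav_bytes, headers=headers, method="POST")
```

Keeping request construction separate from sending makes it easy to inspect or log the request before calling `urllib.request.urlopen`.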

Customizing Voice Output with Azure Speech Services

In this era of personalization, brands want to offer their customers unique, human-like experiences, and voice is a big part of that. Azure Speech Services lets developers and businesses move beyond generic, robotic speech, with tools for creating natural, expressive voices, or even branded voices, for a specific application or audience.

Controlling how an application sounds can significantly boost user engagement, whether you are building virtual assistants, IVR systems, or educational tools. Azure offers ready-made neural voices from Microsoft as well as the ability to create a custom voice, so a brand can develop a voice of its own.

Let’s look at each option in more detail.

a. Neural Text-to-Speech and Voice Personalization

Powered by deep learning models, the neural text-to-speech engine in Azure Speech Services generates remarkably realistic human voices. Many voices support a range of emotions, speaking styles, and prosody, which makes them well suited to storytelling, training modules, and interactive applications. Finer controls let you adjust pitch, speaking rate, intonation, and pauses.
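These controls are expressed in SSML (Speech Synthesis Markup Language). The small sketch below wraps sentences in a `prosody` element with a pause between them and, optionally, an Azure-specific `mstts:express-as` speaking style. The default voice, rate, pitch, and pause values are illustrative, `build_ssml` is a hypothetical helper, and not every voice supports every style.

```python
from xml.sax.saxutils import escape, quoteattr

SSML_NS = "http://www.w3.org/2001/10/synthesis"
MSTTS_NS = "https://www.w3.org/2001/mstts"


def build_ssml(sentences, voice="en-US-JennyNeural", rate="-10%", pitch="+5%",
               pause_ms=400, style=None):
    """Wrap sentences in SSML with prosody settings and a pause between sentences."""
    locale = "-".join(voice.split("-")[:2])
    pieces = []
    for i, sentence in enumerate(sentences):
        if i:  # insert a pause before every sentence except the first
            pieces.append(f'<break time="{pause_ms}ms"/>')
        pieces.append(escape(sentence))
    body = f"<prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>{''.join(pieces)}</prosody>"
    if style:  # Azure-specific extension; only some voices support a given style
        body = f"<mstts:express-as style={quoteattr(style)}>{body}</mstts:express-as>"
    return (
        f'<speak version="1.0" xmlns="{SSML_NS}" xmlns:mstts="{MSTTS_NS}" xml:lang="{locale}">'
        f"<voice name={quoteattr(voice)}>{body}</voice></speak>"
    )
```

The resulting string can be passed to the SDK’s SSML synthesis call or sent as the body of a text-to-speech REST request.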

b. Azure Custom Voice

If your company wants to stand out through a distinctive voice identity, Azure Custom Voice lets you train a synthetic voice on your own voice recordings. It is a good fit for a virtual assistant, a branded customer support agent, or an audiobook narrator with a particular style, capturing your brand’s personality in a way an off-the-shelf voice cannot. Building a custom voice also calls for careful attention to privacy, the voice talent’s consent, and recording quality.

Getting Started with Azure Speech Services

If you have been planning to build a voice application, Azure Speech Services makes it easy to get started, and the developer experience is pleasant. Whether you are adding a simple voice interface or building speech into an enterprise-level solution, getting started with Microsoft’s speech technologies is straightforward.

Azure Speech Services provides intuitive tools, rich documentation, and several integration paths to take your team from idea to production with minimal friction. Here are the steps to get set up:

a. Set up your Azure environment

First, you need a Microsoft Azure account. Once logged in, open the Azure portal and create a new Speech resource under the AI + Machine Learning category. The resource provides the keys and region information your application uses to authenticate against Azure Speech Services.
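A small sketch of keeping those credentials out of source code by reading them from environment variables. The names `SPEECH_KEY` and `SPEECH_REGION` follow the convention in Microsoft’s quickstarts but are not required by the service, and `load_speech_settings` is a hypothetical helper.

```python
import os


def load_speech_settings(env=None):
    """Return (key, region) from the environment, failing fast if either is missing."""
    env = os.environ if env is None else env
    key = env.get("SPEECH_KEY")
    region = env.get("SPEECH_REGION")
    missing = [name for name, value in (("SPEECH_KEY", key), ("SPEECH_REGION", region)) if not value]
    if missing:
        raise RuntimeError("missing environment variable(s): " + ", ".join(missing))
    return key, region
```

Accepting an optional mapping instead of always reading `os.environ` keeps the function easy to test.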

Your Azure dashboard also shows quotas, usage metrics, and billing information, giving you full control and visibility over your deployment.

b. Choose your development approach

There are two main ways to work with these services: the SDKs or the REST APIs. If you are building apps for web, mobile, or embedded devices, the SDKs provide the most seamless integration. For lightweight or serverless scenarios, the REST APIs are a practical choice.

c. Say hi to Azure Speech Studio

Azure Speech Studio is a web portal where developers and non-developers alike can try out speech to text, text to speech, translation, and voice customization features. You can preview functionality, tune voices, upload datasets, and carry configurations through to production use, all without writing a single line of code.

d. Your very first project: Audio to Text!

A good first project is a basic transcription application that takes an audio file and returns its text, using either the SDK or the REST API. It offers valuable insight into how Azure speech to text works and how accurate it is.
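Here is one way such a first project could look, using the REST API and Python’s standard library: read a WAV file, post it to the short-audio endpoint, and pull the transcript out of the JSON reply. It assumes 16 kHz mono PCM audio and the `simple` response format, whose `RecognitionStatus` and `DisplayText` fields are read here; the function names are our own.

```python
import json
import urllib.request

# Short-audio REST endpoint; {region} is your Speech resource region (e.g. "westeurope").
STT_URL = (
    "https://{region}.stt.speech.microsoft.com/speech/recognition/"
    "conversation/cognitiveservices/v1?language={language}&format=simple"
)


def parse_stt_response(body):
    """Return DisplayText from a 'simple'-format response, or raise if recognition failed."""
    payload = json.loads(body)
    status = payload.get("RecognitionStatus")
    if status != "Success":
        # Other statuses include NoMatch and InitialSilenceTimeout.
        raise ValueError(f"recognition did not succeed: {status}")
    return payload.get("DisplayText", "")


def transcribe_file(path, key, region, language="en-US"):
    """Upload a short 16 kHz mono PCM WAV file and return its transcript."""
    with open(path, "rb") as f:
        audio = f.read()
    request = urllib.request.Request(
        STT_URL.format(region=region, language=language),
        data=audio,
        method="POST",
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "audio/wav; codecs=audio/pcm; samplerate=16000",
            "Accept": "application/json",
        },
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_stt_response(response.read())
```

Calling `transcribe_file("meeting.wav", key, "westeurope")` then returns the recognized text, or raises an error if the service reports a status other than `Success`.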


Integration with the Microsoft Ecosystem

Deep integration with the Microsoft ecosystem is what sets Microsoft Speech Services apart. Microsoft’s shared platform makes it easy to bring speech capabilities into your existing stack, whether for customer interactions, productivity, or business workflows.

Azure Speech Services brings voice-enabled features to Dynamics 365, Power Platform, Teams, and Office 365, enhancing usability, accessibility, and automation while shortening development time and ensuring a consistent user experience across enterprise applications.

Now, let’s see where this family of services sits within the greater Microsoft Cloud landscape.

a. Power Platform integration

Power Apps and Power Automate let both developers and business users add voice features with little code. Using built-in connectors and AI Builder, you can set up workflows driven by speech commands, voice transcription, or sentiment analysis, using Azure Speech Services alongside other Azure AI capabilities.

b. Dynamics 365 and Microsoft Teams

Speech capabilities within customer engagement tools such as Dynamics 365 transcribe calls, analyze customer sentiment, and automate note-taking. Microsoft Teams makes use of real-time transcription and closed captions during meetings to enhance collaboration and accessibility.

These features are powered by Microsoft’s speech technology, which delivers speech functionality across Microsoft products and provides close integration, optimized performance, and unified AI governance.

c. Consistency Across Cloud Services

Using these speech services alongside other Microsoft offerings, such as Azure Bot Service, Azure Cognitive Services, and Microsoft Graph, lets you build complete voice-enabled solutions that are intelligent, secure, and scalable from day one.

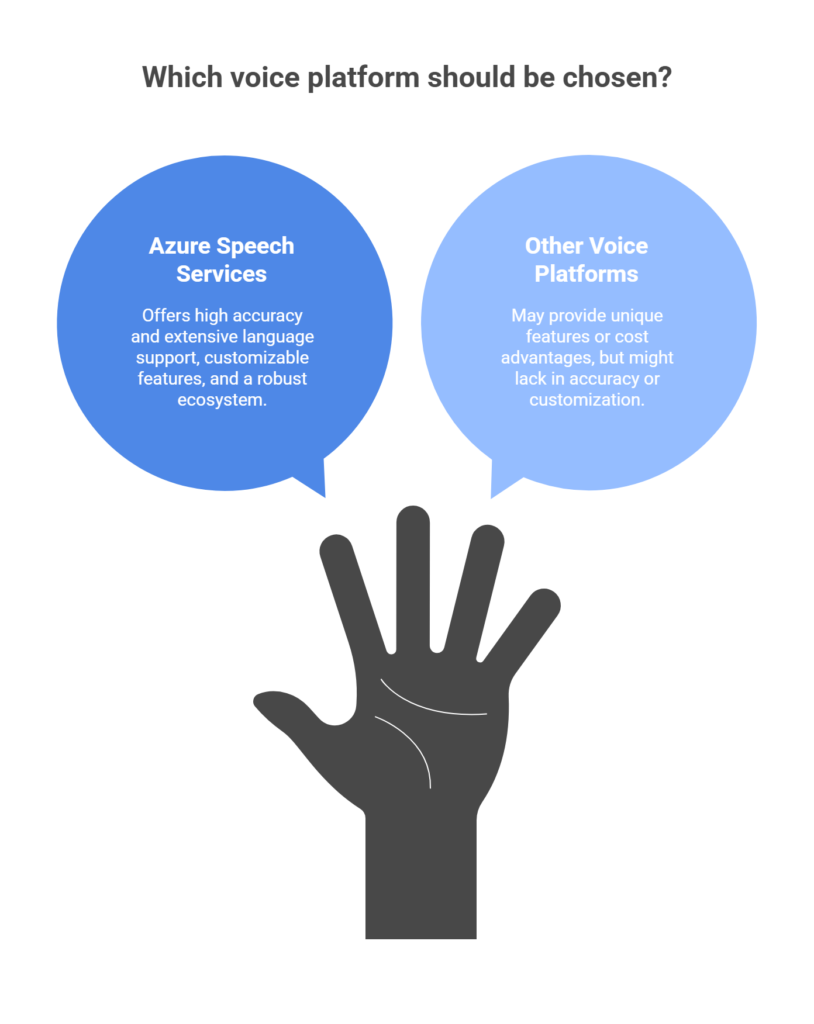

Comparing Azure Speech Services with Other Voice Platforms

Because speech recognition tools are available from multiple cloud providers, choosing the right platform for your needs can be tricky. Azure Speech Services stands out for being enterprise-ready, secure, and flexible, which are essential qualities for companies that need production-grade voice solutions.

While Amazon Transcribe and Google Cloud Speech-to-Text both do a capable job, Azure Speech Services has advantages in integration, customization, and regional language support. Microsoft’s emphasis on responsible AI and accessibility also appeals to organizations with ethical concerns about AI.

a. Accuracy and Language Support

Azure provides high-quality transcription and synthesis across a wide variety of languages and dialects. It handles varied accents and even speech that mixes two languages. Its neural models improve contextual understanding and punctuation.

b. Customization and Flexibility

Few services offer the degree of voice customization that Azure Speech Services provides, especially in combination with other Azure AI tools. Developers can train custom voices and fine-tune pronunciation and speaking style.

c. Ecosystem and Developer Tools

Because of its tight integration with Microsoft’s cloud, DevOps, and productivity tools, Azure offers a familiar development experience for teams already working in the Azure ecosystem. Readily available SDKs, APIs, and prebuilt tools also shorten the path from prototype to production.

The Role of Azure Cognitive Services in Speech

To truly understand what Azure Speech Services can do, it helps to view it as part of a larger AI ecosystem: Azure Cognitive Services. This set of intelligent APIs and SDKs from Microsoft lets developers add vision, language understanding, decision-making, and speech capabilities to their applications. A key strength of the platform is that it provides enterprise-grade AI capabilities without requiring deep machine learning expertise.

Azure Speech Services within the Cognitive Stack

Azure Speech Services is essentially the voice technology within Azure Cognitive Services. It includes speech to text, text to speech, speech translation, and speaker recognition. These services help developers build applications that offer rich, natural voice interactions. Because Microsoft handles the heavy lifting with its cutting-edge models and infrastructure, developers can build applications that listen, speak, and understand in real time.

Building Smarter Applications Combining the Two

One big advantage of Azure Cognitive Services is that they are modular: different services can be combined to create intelligent, personalized workflows. For instance, a virtual assistant may use Azure Speech Services to convert audio into text, then use language understanding services to infer the user’s intent and text analytics to gauge sentiment. Finally, the assistant creates a spoken response with text to speech. The result is an end-to-end intelligent system that communicates naturally.
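The flow described above can be sketched as a chain of pluggable stages. In this illustration each stage is simply a function supplied by the caller; in a real system they would wrap speech to text, a language-understanding service, sentiment analysis, and text to speech. The `VoicePipeline` class and its stage names are hypothetical, not an Azure API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Chains caller-supplied stages: audio -> text -> intent + sentiment -> reply -> audio."""

    speech_to_text: Callable[[bytes], str]       # e.g. wraps Azure speech to text
    detect_intent: Callable[[str], str]          # e.g. wraps a language-understanding service
    score_sentiment: Callable[[str], float]      # e.g. wraps text analytics; negative = unhappy
    compose_reply: Callable[[str, float], str]   # application logic deciding what to say
    text_to_speech: Callable[[str], bytes]       # e.g. wraps Azure text to speech

    def handle(self, audio: bytes) -> bytes:
        """Run one conversational turn through every stage in order."""
        text = self.speech_to_text(audio)
        intent = self.detect_intent(text)
        sentiment = self.score_sentiment(text)
        reply = self.compose_reply(intent, sentiment)
        return self.text_to_speech(reply)
```

Because each stage is injected, the flow can be exercised offline with plain lambdas (for example `speech_to_text=lambda audio: audio.decode()`), and real service calls can be swapped in without changing the pipeline itself.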

Interoperability that Reduces Complexity

All Azure Cognitive Services, including Speech Services, are built on the same infrastructure, which simplifies integration. This common platform provides consistent authentication, resource management, and usage monitoring. Developers can manage everything through one interface instead of juggling separate services, which lets them deploy quickly and build scalable solutions without stitching together disparate tools. This built-in interoperability speeds up development cycles while keeping the architecture simple.
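As an example of that shared authentication model, Azure AI services can exchange a resource key for a short-lived access token (valid for about 10 minutes) at a regional `issueToken` endpoint; the token is then sent as a bearer token instead of the key. The sketch below assumes that flow, and the helper names are hypothetical.

```python
import urllib.request


def build_token_request(key, region):
    """Build (but do not send) a request that exchanges a resource key for an access token."""
    url = f"https://{region}.api.cognitive.microsoft.com/sts/v1.0/issueToken"
    return urllib.request.Request(
        url, data=b"", headers={"Ocp-Apim-Subscription-Key": key}, method="POST"
    )


def fetch_token(key, region):
    """Send the token request; the response body is the token as plain text."""
    with urllib.request.urlopen(build_token_request(key, region), timeout=10) as response:
        return response.read().decode("utf-8")


def bearer_headers(token):
    """Headers to use in place of the key on subsequent Speech REST calls."""
    return {"Authorization": f"Bearer {token}"}
```

Since tokens expire, a client would typically cache one and refresh it shortly before the 10-minute window closes.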

Secure, Compliant, and Enterprise Ready

Security and compliance are among the most important aspects of Azure AI. Like other Cognitive Services, Azure Speech Services is designed to help customers meet strict regulatory requirements such as GDPR, HIPAA, and ISO standards. With capabilities such as data encryption, role-based access control, and configurable data retention policies, customers can adopt voice AI without compromising on privacy or compliance.

From Voice Interaction to Full Cognitive Intelligence

When Azure Speech Services is combined with other Cognitive Services APIs, simple voice features become complete cognitive systems: applications that can see, hear, understand, and respond intelligently. This opens opportunities across industries. Customer support bots can detect growing frustration in a caller’s voice, and education platforms can adapt their delivery based on how learners respond to questions. Combining Speech Services with the broader Cognitive Services suite makes these applications much easier to build.

In short, Speech Services reaches its full potential as part of Microsoft’s cognitive stack. Together, these services let engineers build human-centered experiences in which machines do not just talk but also understand, learn, and adapt.

The Future of Voice AI with Azure

As businesses rely more on digital experiences, demand for better voice technology keeps growing. With continuing innovation in real-time processing, multilingual support, and voice personalization, Azure Speech Services is well positioned to lead this transition. Built on Microsoft’s research in deep learning and natural language processing, the platform is evolving quickly and bringing voice AI into everyday life.

Future versions of Azure Speech Services may offer better contextual awareness, emotional sensitivity, and cross-language features, allowing organizations to create voice interfaces that are more socially aware and emotionally responsive.

a. Multilingual and Context-Aware Models

Microsoft continues to improve its language models to better handle code-switching, regional dialects, and context-dependent speech. This enables more global and inclusive user experiences that mirror how people naturally communicate.

b. Responsible and Ethical Voice AI

With AI ethics under increasing scrutiny, Microsoft continues to apply responsible AI principles to Azure Speech Services, promoting transparency, privacy, and informed consent in voice-enabled applications. This helps organizations build powerful solutions while maintaining user trust and complying with regulations.

c. Rise of Azure AI Speech

These improvements are driven by Azure AI Speech, the name under which Microsoft now offers its speech technologies. It provides state-of-the-art speech recognition, synthesis, and understanding that underpin intelligent tools throughout the Microsoft ecosystem and beyond.

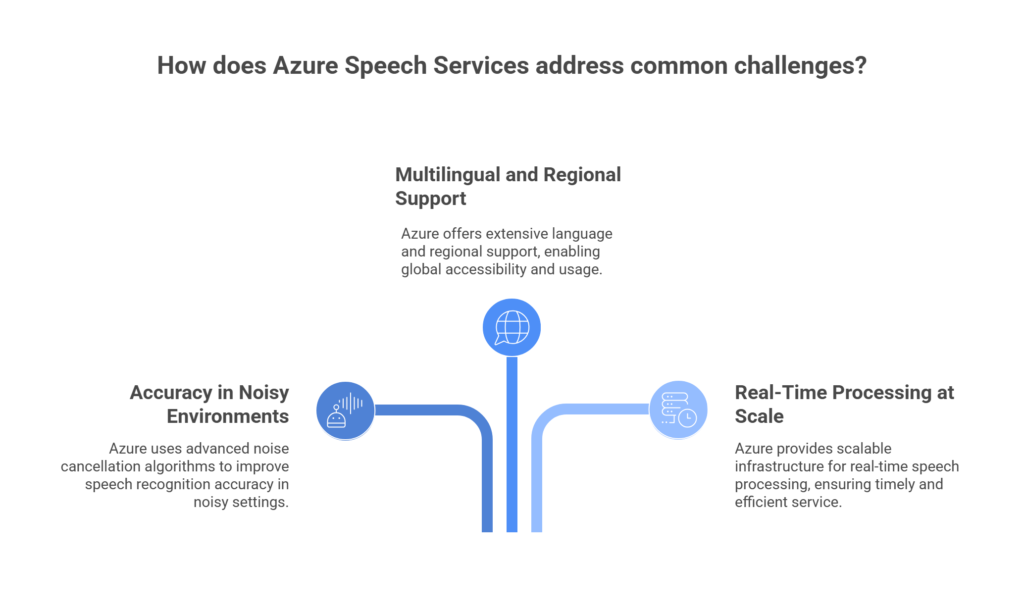

Common Challenges and How Azure Speech Services Solves Them

Adopting voice AI is exciting, but it often comes with technical and operational hurdles. Azure Speech Services tackles many of them directly:

a. Accuracy in Noisy Environments

Real-world audio is full of background noise: chatter, street sounds, a passing train. Azure’s enhanced acoustic modeling and noise suppression help maintain high transcription accuracy in crowded and open environments alike.

b. Multilingual and Regional Support

Many platforms struggle with regional accents or with speakers who switch between languages. Azure Speech Services supports regional dialects and multilingual models, making voice interactions more inclusive and culturally appropriate.

c. Real-Time Processing at Scale

Processing live audio streams at scale is resource-intensive. Azure’s cloud infrastructure and real-time APIs process large volumes of speech data efficiently, with low latency and capacity that scales with demand.

Conclusion

Azure Speech Services enables businesses to build intelligent, secure, and scalable voice-enabled solutions. Deeply woven into Microsoft’s ecosystem and equipped with multilingual, AI-driven capabilities, it turns basic voice features into rich user experiences. These services suit startups and enterprises alike and are helping to shape the future of voice AI.

As organizations continue to use AI to enhance business operations, exploring the full potential of Azure’s ecosystem becomes crucial. If you’re interested in how Azure Cognitive Search is shaping the future of intelligent search, see our detailed blog, “Why Azure Cognitive Search Is the Future of Intelligent Search?” It offers deeper insight into the evolving role of AI-driven search solutions.


FAQs

1. What is Azure Speech Services used for?

Azure Speech Services is used for transcribing speech to text, generating lifelike text-to-speech, translating spoken language, and recognizing speakers.

2. Can I create a custom voice using Azure?

Yes, with Azure Custom Voice, you can create a voice model trained on your own data.

3. Is Azure Speech Services free to try?

Yes. Microsoft offers a free (F0) tier that includes limited monthly usage for transcription and synthesis.

4. Which programming languages does Azure Speech SDK support?

The Azure Speech SDK supports C#, C++, Java, JavaScript, Python, Objective-C, Swift, and Go.

5. How secure is Azure Speech Services for enterprise use?

Azure Speech Services runs on Microsoft’s secure cloud infrastructure and supports compliance with standards such as GDPR, HIPAA, and ISO.